Overview:

A week or so ago I had a faculty member at UNT ask if I could work with one of his students to get a copy of the OCR text of several titles of historic Texas newspapers that we have on The Portal to Texas History.

While we provide public access to the full text for searching and discovering newspaper pages of interest to users, we don’t have a very straightforward way to publicly obtain the full text for a given issue, let alone for full titles that may be many tens of thousands of pages in size.

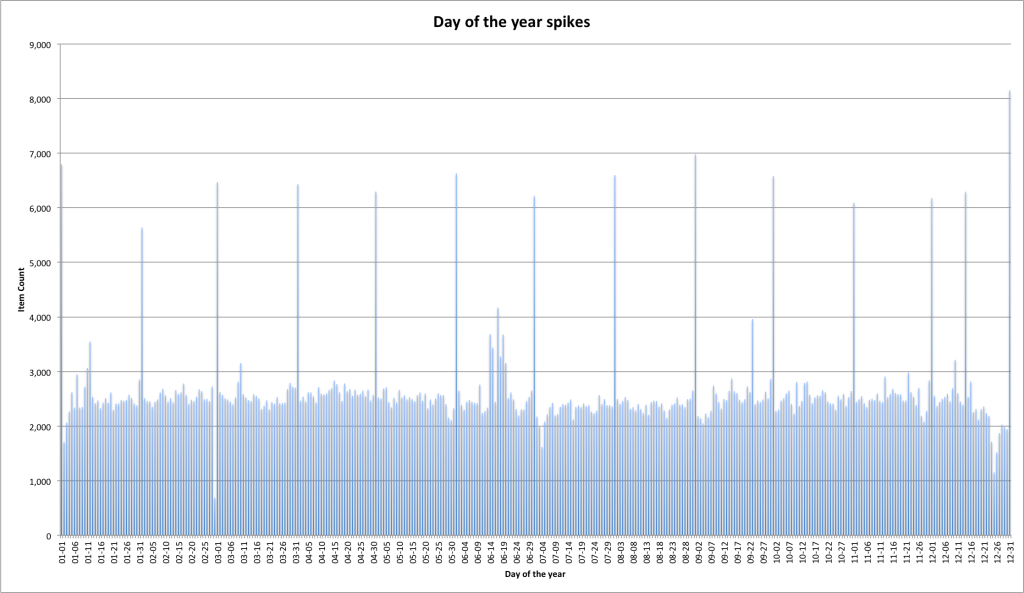

By the end of the week I had pulled roughly 79,000 newspaper issues comprising over 785,000 pages of OCR text. We are making these publicly available in the UNT Data Repository under a CC0 License so that others might be able to make use of them. Feel free to jump over to the UNT Digital Library to grab a copy.

Background:

The UNT Libraries and The Portal to Texas History have operated the Texas Digital Newspaper Program for nine years with the goal of preserving and making available as many newspapers published in Texas as we are able to collect and secure rights to. At this time we have nearly 3.5 million pages of Texas newspapers ranging from the 1830s all the way to 2015. Jump over to the TDNP collection in the Portal to take a look at all of the content there, including a list of all of the titles we have digitized.

The titles in the datasets were chosen by the student and professor. They seem to be a fairly decent sampling of the communities in the Portal that are both large in size and have a significant number of newspaper pages digitized.

Here is a full list of the communities, page count, issue count, and links to the dataset itself in the UNT Digital Library.

Dataset Layout:

Each of the datasets is a gzipped tar file that contains a multi-level directory structure. In addition there is a README.txt created for each of the datasets. Here is an example of the Denton README.txt

Each of the datasets is organized by title. Here is the structure for the Denton dataset.

    Denton
    └── data
        ├── Denton_County_News
        ├── Denton_County_Record_and_Chronicle
        ├── Denton_Evening_News
        ├── Legal_Tender
        ├── Record_and_Chronicle
        ├── The_Denton_County_Record
        └── The_Denton_Monitor
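Unpacking one of the gzipped tar files and listing its title folders might look like the following minimal sketch. The function names are made up for illustration, and the sketch assumes the archive unpacks to a top-level community folder such as Denton, as in the layout shown here.

```python
import tarfile
from pathlib import Path


def extract_dataset(archive_path, dest_dir):
    """Unpack a gzipped tar dataset into dest_dir and return that folder."""
    dest = Path(dest_dir).resolve()
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        # Refuse any member that would be written outside dest_dir.
        for member in tar.getmembers():
            target = (dest / member.name).resolve()
            if target != dest and dest not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        tar.extractall(dest)
    return dest


def list_titles(community_dir):
    """Return the sorted title folder names under <community>/data."""
    data_dir = Path(community_dir) / "data"
    return sorted(p.name for p in data_dir.iterdir() if p.is_dir())
```

With a downloaded archive saved under a hypothetical name like Denton.tar.gz, calling `extract_dataset("Denton.tar.gz", "datasets")` and then `list_titles("datasets/Denton")` should return the title folder names.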

Within each of the title folders is a subfolder for each year in which we have at least one newspaper issue.

    Denton/data/Denton_County_Record_and_Chronicle/
    ├── 1898
    ├── 1899
    ├── 1900
    └── 1901

Finally, each of the year folders contains a folder for each issue present in The Portal to Texas History on the day the dataset was extracted.

    Denton
    └── data
        ├── Denton_County_News
        │   ├── 1892
        │   │   ├── 18920601_metapth502981
        │   │   ├── 18920608_metapth502577
        │   │   ├── 18920615_metapth504880
        │   │   ├── 18920622_metapth504949
        │   │   ├── 18920629_metapth505077
        │   │   ├── 18920706_metapth501799
        │   │   ├── 18920713_metapth502501
        │   │   ├── 18920720_metapth502854

Each of these issue folders is named with the date of publication in yyyymmdd format, followed by an underscore and the ARK identifier from the Portal (for example, 18920601_metapth502981).
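Splitting an issue folder name back into its date and identifier could be done roughly as follows. This is a sketch that assumes every folder follows the pattern seen in the listing above (eight digits, an underscore, then the identifier) and that every date is a valid calendar date; `parse_issue_folder` and `IssueFolder` are illustrative names, not part of the dataset.

```python
import re
from datetime import date, datetime
from typing import NamedTuple

# Eight digits (yyyymmdd), an underscore, then the identifier.
ISSUE_DIR = re.compile(r"(\d{8})_(.+)")


class IssueFolder(NamedTuple):
    published: date
    identifier: str


def parse_issue_folder(name):
    """Split a folder name like 18920601_metapth502981 into date and identifier."""
    match = ISSUE_DIR.fullmatch(name)
    if match is None:
        raise ValueError(f"not an issue folder name: {name!r}")
    # strptime raises ValueError if the digits are not a real date.
    published = datetime.strptime(match.group(1), "%Y%m%d").date()
    return IssueFolder(published, match.group(2))
```

Having the date as a real `date` object makes it easy to sort issues or select a date range across titles.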

Each of these folders is a valid BagIt bag that can be verified with tools like bagit.py. Here is the structure for an issue.
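For a quick check without installing anything, the payload checksums can also be compared by hand. The sketch below is not a full BagIt validation and is not how bagit.py works internally; it only reads manifest-md5.txt, assumes each line is an MD5 checksum followed by whitespace and a path relative to the bag, and reports files that are missing or do not match. The function name is hypothetical.

```python
import hashlib
from pathlib import Path


def check_payload_md5(bag_dir):
    """Return the manifest paths that are missing or fail their MD5 check."""
    bag = Path(bag_dir)
    problems = []
    with open(bag / "manifest-md5.txt", encoding="utf-8") as manifest:
        for line in manifest:
            line = line.strip()
            if not line:
                continue
            # Assumed line format: "<md5 hex digest> <relative/path>"
            expected, rel_path = line.split(None, 1)
            target = bag / rel_path
            if not target.is_file():
                problems.append(rel_path)
                continue
            actual = hashlib.md5(target.read_bytes()).hexdigest()
            if actual != expected.lower():
                problems.append(rel_path)
    return problems
```

An empty list means every file named in the manifest is present and matches. It does not detect extra files or check the tag manifest, so a tool like bagit.py is still the better choice for real verification.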

    18921229_metapth505423
    ├── bag-info.txt
    ├── bagit.txt
    ├── data
    │   ├── metadata
    │   │   ├── ark
    │   │   ├── metapth505423.untl.xml
    │   │   └── portal_ark
    │   └── text
    │       ├── 0001.txt
    │       ├── 0002.txt
    │       ├── 0003.txt
    │       └── 0004.txt
    ├── manifest-md5.txt
    └── tagmanifest-md5.txt

The OCR text is located in the text folder, and three metadata files are present in the metadata folder: a file called ark that contains the ARK identifier for this item, a file called portal_ark that contains the URL to this issue in The Portal to Texas History, and a metadata file in the UNTL metadata format.
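Loading one issue into memory might look like the sketch below. It assumes the numbered .txt files are one per page and sort into reading order by filename, and that the files are UTF-8 (undecodable bytes are replaced rather than raising an error). The `read_issue` helper and the keys of the dictionary it returns are made up for this example.

```python
from pathlib import Path


def read_issue(issue_dir):
    """Read the identifier, Portal URL, and OCR text files for one issue."""
    issue = Path(issue_dir)
    metadata = issue / "data" / "metadata"
    ark = (metadata / "ark").read_text(encoding="utf-8").strip()
    portal_url = (metadata / "portal_ark").read_text(encoding="utf-8").strip()
    # Zero-padded names (0001.txt, 0002.txt, ...) sort correctly as strings.
    page_files = sorted((issue / "data" / "text").glob("*.txt"))
    pages = [p.read_text(encoding="utf-8", errors="replace") for p in page_files]
    return {"ark": ark, "portal_url": portal_url, "pages": pages}
```

Joining the list with `"\n".join(issue["pages"])` gives the full text of an issue as a single string.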

I hope that these datasets are useful to folks interested in trying their hand at working with a large collection of OCR text from newspapers. I should remind everyone that this is uncorrected OCR text and will most likely need a fair bit of pre-processing because it is far from perfect.
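As one example of the kind of pre-processing I mean, here is a small sketch that rejoins words hyphenated across line breaks and collapses runs of whitespace. It is only a starting point and not something applied to these datasets: it will also merge genuinely hyphenated compounds that happen to fall at a line end, and it does nothing about misrecognized characters. The function name is illustrative.

```python
import re


def clean_ocr_page(text):
    """Rejoin end-of-line hyphenation and normalize whitespace in OCR text."""
    # "news-\npaper" becomes "newspaper"
    text = re.sub(r"(\w)-\s*\n\s*(\w)", r"\1\2", text)
    # Collapse newlines, tabs, and repeated spaces into single spaces.
    text = re.sub(r"\s+", " ", text)
    return text.strip()
```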

If you have questions or comments about this post, please let me know via Twitter.