When we created our digital library infrastructure a few years ago, one of the design goals of the system was that we would create write-once digital objects for the Archival Information Packages (AIPs) that we store in our Coda repository.

Currently we store two copies of each of these AIPs: one locally in the Willis Library server room and another in the UNT System Data Center at the UNT Discovery Park research campus, five miles north of the main campus.

Over the past year we have been working on a self-audit using the TRAC Criteria and Checklist as part of our goal of demonstrating that the UNT Libraries Digital Collections is a Trusted Digital Repository. In addition to this TRAC work, we’ve also used the NDSA Levels of Preservation to help frame where we are with our digital preservation infrastructure and where we would like to be in the future.

One of the things I was thinking about recently is what it would take for us to get to Level 3 of the NDSA Levels of Preservation for “Storage and Geographic Location”:

“At least one copy in a geographic location with a different disaster threat”

In thinking about this, I was curious what the lowest cost would be to create this third copy of my data and move it someplace outside of our local disaster threat area.

First, some metrics

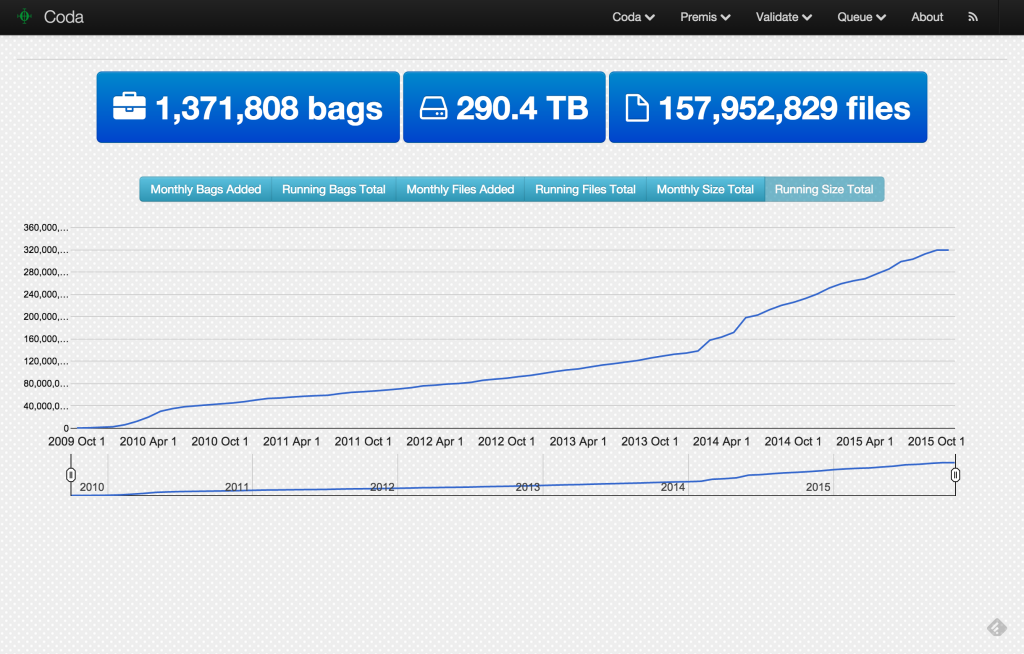

The UNT Libraries’ Digital Collections has grown considerably over the past five years that we’ve had our current infrastructure.

As of this post, we have 1,371,808 bags of data containing 157,952,829 files in our repository, taking up 290.4 TB of storage for each copy we keep.

As you can see from the image above, the growth curve changed starting in 2014 and has been somewhat steeper than it was previously. From what I can tell, it is going to continue at this rate for a while.

So I need to figure out what it would cost to store 290 TB of data for my third copy.

Some options.

There are several options for where I could store my third copy. I could store my data with a service like Chronopolis, MetaArchive, DPN, or DuraSpace, to name a few. These all have different cost models and different services, and for what I want to accomplish with this post and my current musing, they are overkill.

I could also use a cloud-based service like Amazon Glacier, or even work with one of the large high-performance computing facilities, such as TACC at the University of Texas, to store a copy of all of my data. This is another option, but again not one I’m interested in musing about in this post.

So what is left? I could spin up another rack of storage, put our Coda repository software on top of it, and start replicating my third copy. The problem is getting that rack somewhere several hundred miles away: UNT doesn’t have any facilities outside of the DFW area, so that is out of the question.

That leaves me thinking about tape infrastructure, specifically an LTO-6 setup that I could spool a copy of all of our data to, and then send those tapes off to a storage facility, possibly something like the TSLAC Records Management Services for Government Agencies.

Spooling to Tape

So in this little experiment, I wanted to find out how many LTO-6 tapes it would take to store the UNT Libraries Digital Collections. I pulled a set of data from Coda that contained the 1,371,808 bags of data and the size of each bag in bytes.

The uncompressed capacity of an LTO-6 tape is 2.5 TB, so some quick math (290.4 ÷ 2.5 ≈ 116.2) says that it will take at least 117 tapes to write all of my data. Even that is probably low, because it assumes that I can completely fill each tape with exactly 2.5 TB of data.
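The quick math is just the total divided by the native per-tape capacity, rounded up, because a partly used tape still counts as a whole tape. A minimal sketch using the figures above, assuming both numbers are in the same (decimal) terabytes:

```python
import math

REPO_TB = 290.4        # size of one copy of the repository, as reported from Coda
LTO6_NATIVE_TB = 2.5   # uncompressed (native) LTO-6 capacity


def min_tapes(total_tb: float, tape_tb: float) -> int:
    """Lower bound on tape count: assumes every tape is filled to exactly tape_tb."""
    return math.ceil(total_tb / tape_tb)


if __name__ == "__main__":
    print(f"raw ratio: {REPO_TB / LTO6_NATIVE_TB:.2f}")
    print(f"tapes needed (at least): {min_tapes(REPO_TB, LTO6_NATIVE_TB)}")
```

The ratio is about 116.2, so rounding up gives a floor of 117 tapes; any packing inefficiency only pushes the real number higher.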

I figured that there were at least three ways to approach distributing digital objects to tape:

- Write items in the order that they were accessioned

- Write items in order from smallest to largest

- Fill each tape to the highest capacity before moving to the next

I wrote three small Python scripts that simulated these options to find the number of tapes needed and the overall storage efficiency of each method. I decided to fill each tape with only 2.4 TB of data to give myself plenty of wiggle room. Here are the results:

| Method | Number of Tapes | Efficiency |
| --- | ---: | ---: |
| Smallest to Largest | 136 | 96.91% |
| In order of accession | 136 | 96.91% |
| Fill a tape completely | 132 | 99.85% |
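The original scripts aren’t shown in this post, so what follows is only a guess at what such a simulation might look like. The first two methods are the classic "next fit" packing, run on the bag sizes either in accession order or sorted smallest to largest. For "fill a tape completely" I have assumed a greedy rule: for each tape, repeatedly take the largest remaining bag that still fits. The 2.4 TB target is expressed in decimal bytes, also an assumption.

```python
import bisect
from typing import Iterable, List

# Assumed fill target from the post: 2.4 TB per tape, in decimal bytes.
TAPE_CAPACITY = 2_400_000_000_000


def next_fit(sizes: Iterable[int], capacity: int = TAPE_CAPACITY) -> List[List[int]]:
    """Write bags in the order given; open a new tape when the next bag won't fit.

    On accession-ordered sizes this is "in order of accession";
    on sorted(sizes) it is "smallest to largest".
    """
    tapes: List[List[int]] = [[]]
    used = 0
    for size in sizes:
        if size > capacity:
            raise ValueError(f"bag of {size} bytes does not fit on one tape")
        if used + size > capacity:
            tapes.append([])  # current tape can't take this bag; start a fresh one
            used = 0
        tapes[-1].append(size)
        used += size
    return [tape for tape in tapes if tape]  # drop the empty starter tape if no input


def greedy_fill(sizes: Iterable[int], capacity: int = TAPE_CAPACITY) -> List[List[int]]:
    """Fill one tape at a time with the largest remaining bag that still fits.

    One possible reading of "fill each tape to the highest capacity";
    list.pop() from the middle makes it quadratic in the worst case.
    """
    remaining = sorted(sizes)
    if remaining and remaining[-1] > capacity:
        raise ValueError("at least one bag does not fit on one tape")
    tapes: List[List[int]] = []
    while remaining:
        tape: List[int] = []
        free = capacity
        while True:
            i = bisect.bisect_right(remaining, free)  # remaining[:i] all fit in `free`
            if i == 0:
                break  # nothing left is small enough for this tape
            size = remaining.pop(i - 1)  # largest bag that still fits
            tape.append(size)
            free -= size
        tapes.append(tape)
    return tapes


def efficiency(tapes: List[List[int]], capacity: int = TAPE_CAPACITY) -> float:
    """Share of the total target capacity (tapes x capacity) actually holding data."""
    return sum(sum(tape) for tape in tapes) / (len(tapes) * capacity)
```

Fed the per-bag byte counts from Coda, `next_fit(sizes)`, `next_fit(sorted(sizes))`, and `greedy_fill(sizes)` line up with the three rows of the table; whether they reproduce those exact counts depends on how the original scripts defined each method.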

In my thinking, the simplest way to write objects to tape would be to order them by accession date and write them to a tape until it is full, then start writing to the next tape.

If we assume that a tape costs $32, the overhead of this less efficient but simpler approach is only $128 (four extra tapes), which to me is completely worth it. This way, in the future I could just keep writing tapes as new content gets ingested, picking up where I left off.
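"Picking up where I left off" only needs two pieces of bookkeeping between write sessions: which tape is currently being filled and how many bytes are already on it. A minimal sketch, assuming that state is saved somewhere between runs; `TapeState`, `assign_new_bags`, and the bag IDs are hypothetical names for illustration, and the 2.4 TB target is assumed to be in decimal bytes:

```python
from dataclasses import dataclass
from typing import List, Tuple

TAPE_CAPACITY = 2_400_000_000_000  # assumed 2.4 TB fill target, in bytes


@dataclass
class TapeState:
    """Where the last write session stopped (hypothetical bookkeeping record)."""
    tape_number: int  # tape currently being filled
    bytes_used: int   # bytes already written to that tape


def assign_new_bags(state: TapeState, new_bags: List[Tuple[str, int]],
                    capacity: int = TAPE_CAPACITY) -> List[Tuple[str, int]]:
    """Assign newly ingested (bag_id, size) pairs, in accession order, to tapes.

    Continues on the partially filled tape and opens the next tape whenever a
    bag won't fit. Mutates `state` so the next session can resume from it.
    """
    assignments: List[Tuple[str, int]] = []
    for bag_id, size in new_bags:
        if size > capacity:
            raise ValueError(f"{bag_id} does not fit on one tape")
        if state.bytes_used + size > capacity:
            state.tape_number += 1  # current tape is full; move to the next one
            state.bytes_used = 0
        assignments.append((bag_id, state.tape_number))
        state.bytes_used += size
    return assignments
```

Because new content is always appended in accession order, a tape that has been closed out never needs to be rewritten, which fits the write-once design goal for AIPs.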

From what I can figure from my poking around on Dell.com and various tape retailers, I’m going to be out roughly $10,000 for the initial tape infrastructure, which would include a tape autoloader and a server to stage files from our Coda repository. Another $4,352 would buy the 136 LTO-6 tapes needed for the current 290 TB of data in Coda. If I assume a five-year replacement cycle for this technology (so that I can spread the initial costs out over five years), that comes to just about $50 per TB; divided over the five-year lifetime of the technology, that’s about $10 per TB per year.

If you prefer GB prices, that works out to about $0.05 per GB, or about $0.01 per GB per year.
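The amortization is simple enough to write down as a few lines of arithmetic. A minimal sketch using the figures from this post; the $32 per tape is backed out of $4,352 ÷ 136 tapes, and the per-GB numbers assume decimal units (1 TB = 1,000 GB):

```python
from typing import Dict


def amortized_costs(infrastructure_usd: float, tape_count: int, tape_usd: float,
                    data_tb: float, years: int) -> Dict[str, float]:
    """Spread one-time hardware and media costs over the data stored and the lifetime."""
    total = infrastructure_usd + tape_count * tape_usd
    per_tb = total / data_tb
    return {
        "total_usd": total,
        "per_year_usd": total / years,
        "per_tb_usd": per_tb,
        "per_tb_year_usd": per_tb / years,
        "per_gb_usd": per_tb / 1000,  # decimal: 1 TB = 1,000 GB
        "per_gb_year_usd": per_tb / 1000 / years,
    }


if __name__ == "__main__":
    # $10,000 autoloader + staging server, 136 tapes at $32, 290.4 TB, 5-year life
    costs = amortized_costs(10_000, 136, 32, 290.4, 5)
    for name, value in costs.items():
        print(f"{name:>16}: ${value:,.4f}")
```

The per-year figure is the total divided by five, which is the number compared against Glacier below; the per-TB numbers round to the roughly $50 and $10 quoted above.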

If I were to use Amazon Glacier (calculated with an unofficial Amazon Glacier calculator, and assuming a whole bunch of things related to data transfer that I’ll gloss over), I come up with a cost of $35,283.33 per year instead of my roughly calculated $2,870.40 per year. (I realize that these cost comparisons aren’t for the same service and that Glacier includes extra redundancy, but I think you get the point.)

There is one more cost associated with this: off-site storage of the 136 LTO-6 tapes. Right now I have no idea what that would cost, but I assume it could range from little or nothing, as part of an MOU with another academic library, to something more costly, like a contract with a commercial service. I’m interested to see whether UNT would be able to take advantage of the services TSLAC offers through its Records Management Services.

So what’s next?

I’ve had fun musing about this sort of thing for the past day or so. I have zero experience with tape infrastructure, and from what I can tell it can get as cool and feature-rich as you are willing to pay for. I like the idea of keeping it simple, so if I can work directly with a tape autoloader using command-line tools like tar and mt, that is what I would prefer.
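A "tar and mt" workflow for a single tape could be as small as: rewind, write each bag as its own tar archive, then eject. A minimal sketch that only builds the command lines (it does not run them), assuming a Linux non-rewinding tape device at `/dev/nst0` and hypothetical staging paths. Writing one archive per bag means a single bag can later be reached with `mt fsf` instead of reading the whole tape. Swapping cartridges in an autoloader is usually handled by a separate media-changer tool such as `mtx` and is not covered here.

```python
import os
import shlex
from typing import List

TAPE_DEVICE = "/dev/nst0"  # assumed non-rewinding tape device; adjust for your drive


def write_plan(bag_dirs: List[str], device: str = TAPE_DEVICE) -> List[List[str]]:
    """Build (but do not run) the commands to write one tape, one tar file per bag."""
    plan = [["mt", "-f", device, "rewind"]]
    for bag in bag_dirs:
        parent, name = os.path.split(bag.rstrip("/"))
        # -C keeps paths inside each archive relative to the bag's parent directory
        plan.append(["tar", "-cf", device, "-C", parent or ".", name])
    plan.append(["mt", "-f", device, "offline"])  # rewind and unload when done
    return plan


if __name__ == "__main__":
    # Hypothetical staging paths; review the plan before running anything.
    for argv in write_plan(["/staging/bags/bag-0001", "/staging/bags/bag-0002"]):
        print(shlex.join(argv))
```

Keeping it as a dry-run plan makes it easy to review before handing each command to `subprocess.run`.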

Hope you enjoyed my musings here. If you have thoughts or suggestions, or if I missed something, please let me know via Twitter.